Artificial intelligence (AI) is reshaping recruitment. From parsing CVs to shortlisting candidates, AI-driven tools are saving hiring teams hours of manual effort. But beneath the surface lies a critical concern: bias in AI recruitment.

While these technologies promise neutrality, research shows that AI systems can perpetuate or even amplify human biases, especially when they are not rigorously tested or ethically trained. For recruitment agencies that use AI software to screen CVs and handle thousands of candidate profiles across sectors and geographies, ensuring fairness in automated hiring is no longer a nice-to-have; it’s a business imperative.

How Does Bias Enter AI Recruitment Tools?

AI recruitment tools learn from historical hiring data. If past hiring decisions were biased, consciously or unconsciously, AI systems trained on that data may replicate those patterns. For instance:

- An AI tool trained on resumes from a historically male-dominated sector may prioritise male candidates.

- Tools trained on Western naming conventions may inadvertently give lower scores to names from other cultural backgrounds.

- Language models used in screening may misinterpret colloquialisms or regional expressions.

This is known as algorithmic bias: the data or logic driving a tool embeds skewed or unfair preferences.
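One way to probe for the name-related bias described above is a simple swap test: score the same CV several times, changing only the candidate's name, and compare the averages. The sketch below is illustrative only. `name_swap_gap`, `toy_biased_scorer`, the CV template, and the sample names are all hypothetical; a real check would call the vendor's actual scoring function and use a much larger, carefully chosen set of names.

```python
from statistics import mean


def name_swap_gap(score_cv, cv_template, names_a, names_b):
    """Average score difference when only the candidate name changes.

    score_cv    -- any function that takes CV text and returns a number
    cv_template -- CV text containing a {name} placeholder
    A result near zero suggests the name alone is not moving the score.
    """
    scores_a = [score_cv(cv_template.format(name=n)) for n in names_a]
    scores_b = [score_cv(cv_template.format(name=n)) for n in names_b]
    return mean(scores_a) - mean(scores_b)


def toy_biased_scorer(cv_text):
    """Hypothetical stand-in for a screening tool, deliberately unfair."""
    score = 0.5
    if "Python" in cv_text:
        score += 0.3
    if cv_text.startswith("Name: James"):
        score += 0.1  # rewards a specific first name: this is the bias
    return score


template = "Name: {name}\nSkills: Python, SQL\nExperience: 5 years"
gap = name_swap_gap(
    toy_biased_scorer,
    template,
    names_a=["James Smith", "James Murphy"],  # illustrative names only
    names_b=["Aoife Byrne", "Chidi Okafor"],
)
print(f"Average score gap from name alone: {gap:.2f}")
```

Because the CV content is identical in every call, any gap can only come from the name. A persistent non-zero gap is a signal to raise with the vendor, not proof of the cause.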

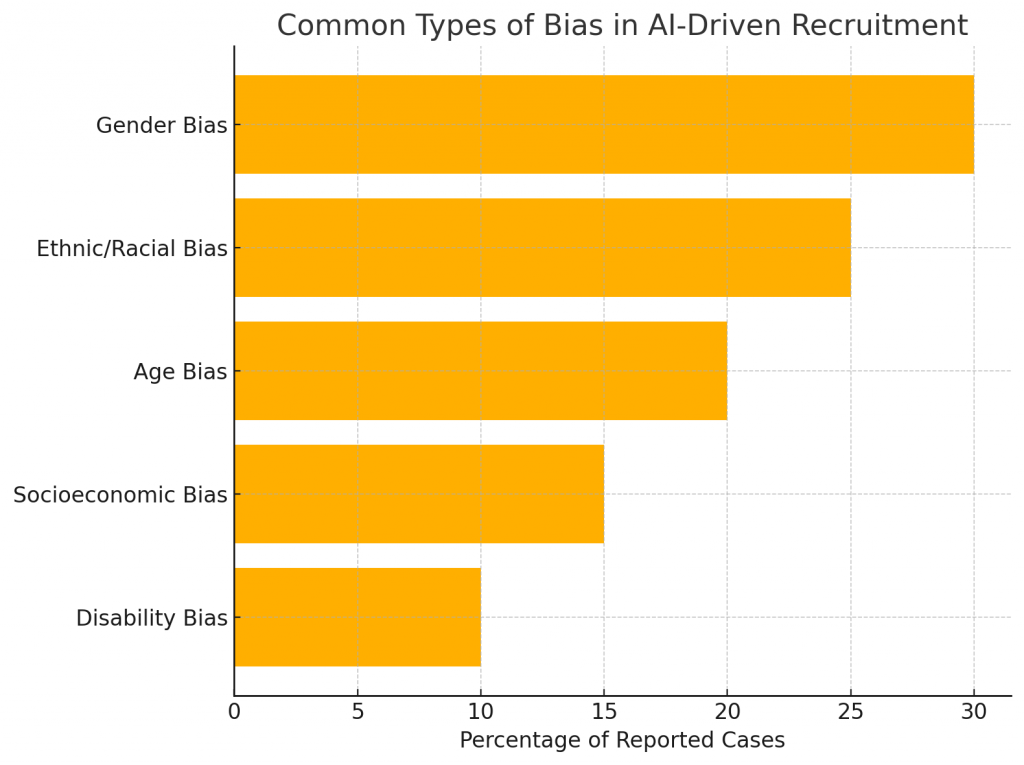

Types of Bias in AI Hiring

Here’s a visual breakdown of the most common forms of bias found in AI-driven recruitment systems:

Real-World Cases: When AI Went Wrong

1. Amazon’s CV Screening Tool

In 2018, Reuters reported that Amazon had scrapped an experimental AI resume screening tool after discovering it penalised female candidates. The system had been trained on roughly ten years of resumes submitted to the company, most of them from men, resulting in gender-biased outcomes.

2. HireVue’s Video Interviews

Facial expression and tone analysis in HireVue’s video interviews raised concerns about racial and disability-based bias. Under regulatory pressure, HireVue eventually removed the facial analysis feature from its product.

These cases underscore the risk of relying solely on opaque, automated processes for crucial decisions like hiring.

Ensuring Fairness: What Recruiters Should Look For

1. Audit the Algorithms

Recruitment teams must ask AI vendors for transparency reports:

- What data was the model trained on?

- Was the model tested for demographic fairness?

- Are there explainability tools available?
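To make the demographic fairness question concrete, a recruiter could compare shortlisting rates across groups. The sketch below assumes a simple export of `(group, shortlisted)` pairs, which is a hypothetical format, and uses made-up sample numbers. The 0.8 threshold is a commonly cited rule of thumb (the "four-fifths" guideline) and is an assumption here, not a requirement of any law discussed in this article.

```python
from collections import Counter


def selection_rates(records):
    """records: iterable of (group, shortlisted) pairs -> {group: rate}."""
    totals, selected = Counter(), Counter()
    for group, shortlisted in records:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were shortlisted")
    return {g: r / best for g, r in rates.items()}


# Made-up sample: 100 candidates per group, labelled "A" and "B".
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 24 + [("B", False)] * 76
)

rates = selection_rates(records)
ratios = impact_ratios(rates)

THRESHOLD = 0.8  # assumed rule-of-thumb cut-off, adjust to your own policy
flagged = [g for g, r in ratios.items() if r < THRESHOLD]
print("Selection rates:", rates)
print("Groups below threshold:", flagged)
```

A flagged group is a prompt for closer review rather than a verdict: small sample sizes or differences in the applicant pool can also produce gaps.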

2. Use Diverse Training Data

The broader and more representative the data used to train the AI, the fairer the outcomes. Recruitment agencies should work with vendors who use diverse candidate sets and regularly update training data.

3. Complement AI with Human Oversight

AI should enhance, not replace, human judgment. Fair hiring requires a hybrid approach, where recruiters validate AI-generated recommendations and bring context into decision-making.

4. Monitor Outcomes Over Time

Bias isn’t always visible upfront. Long-term tracking of the following can reveal hidden patterns and enable course correction:

- Candidate demographics at each recruitment stage.

- Hiring success rates by gender, ethnicity, and disability.

- Candidate feedback on fairness.
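The stage-by-stage tracking described above could be sketched as a small funnel report. This is a minimal illustration: the stage names in `STAGES`, the `(group, furthest_stage_reached)` input format, and the sample counts are all assumptions, not taken from any particular applicant tracking system.

```python
from collections import defaultdict

# Hypothetical stage names, in the order candidates move through them.
STAGES = ["applied", "screened", "interviewed", "hired"]


def stage_pass_rates(candidates):
    """candidates: iterable of (group, furthest_stage_reached) pairs.

    Returns {group: {stage: share of the previous stage that reached it}}.
    """
    reached = defaultdict(lambda: [0] * len(STAGES))
    for group, furthest in candidates:
        # A candidate who reached a stage also passed every earlier one.
        for i in range(STAGES.index(furthest) + 1):
            reached[group][i] += 1

    rates = {}
    for group, counts in reached.items():
        rates[group] = {
            STAGES[i]: counts[i] / counts[i - 1]
            for i in range(1, len(STAGES))
            if counts[i - 1] > 0  # skip stages nobody could enter
        }
    return rates


# Made-up sample: 100 applicants per group.
candidates = (
    [("A", "applied")] * 50 + [("A", "screened")] * 30
    + [("A", "interviewed")] * 15 + [("A", "hired")] * 5
    + [("B", "applied")] * 70 + [("B", "screened")] * 20
    + [("B", "interviewed")] * 8 + [("B", "hired")] * 2
)

funnel = stage_pass_rates(candidates)
for group, by_stage in funnel.items():
    print(group, {stage: round(rate, 2) for stage, rate in by_stage.items()})
```

Reading the rates per stage, rather than only the final hire rate, helps show where in the process a gap opens up, for example at automated screening versus at interview.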

Regulatory and Ethical Developments

Both the EU AI Act and the UK’s Equality Act 2010 have implications for AI-based hiring practices. The EU’s legislation classifies recruitment tools as high-risk AI, meaning vendors and employers must ensure transparency, non-discrimination, and human oversight.

Ethical hiring is not just a legal issue; it impacts employer branding, candidate trust, and long-term DEI (Diversity, Equity, Inclusion) outcomes.

The Recruiter’s Role in the Future of Fair AI

At PE Global, we believe recruitment professionals are vital in steering the ethical use of AI. By partnering with responsible tech providers, staying updated on legal changes, and centring fairness in hiring policies, we can create a system that is both efficient and equitable.

AI can help recruiters cast a wider net and reduce unconscious bias, but only if it is designed and deployed responsibly. As the future of hiring becomes increasingly automated, let’s ensure it remains fundamentally human.

For more details on PE Global’s recruitment solutions, contact our team by email at queries@peglobal.net or call +353 (0)21 4297900.